Disable Cloudflare AI-Bot Block and Let GEO-Targeted Traffic Flow

(“GEO traffic” here = Generative-Engine-Optimised traffic from AI assistants like ChatGPT, Claude, Perplexity, and Gemini.)

Open your Cloudflare security events this week and you’ll spot a pattern: GPTBot, ClaudeBot, and PerplexityBot knocking and being turned away, while AI answers cite third-party summaries of your own content instead.

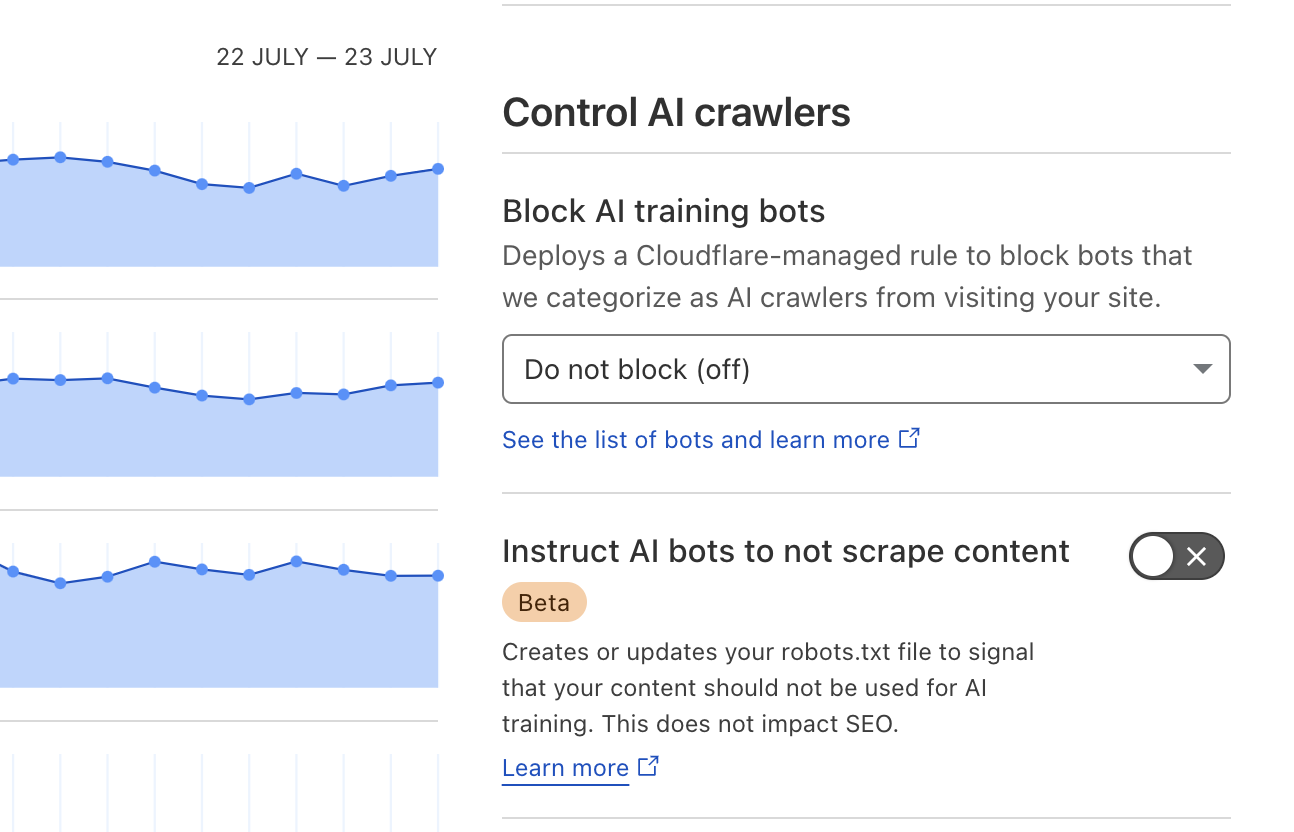

If your site runs through Cloudflare, odds are you didn’t block them on purpose. A single toggle, “Block AI Scrapers,” ships enabled in Bot Fight Mode. It promises to save bandwidth and protect content, but in practice it strangles GEO traffic: the citations and referral clicks from AI assistants that now answer a billion queries a day.

When Cloudflare serves a 403, ChatGPT falls back on whatever it can find elsewhere: Product Hunt blurbs, out-of-date reviews, or competitor write-ups. You lose control of the narrative and, more painfully, the link that would have driven qualified visitors straight to your site.

This article is a two-minute fix with a six-figure upside. We’ll show you exactly how Cloudflare’s setting works, why letting reputable AI crawlers in is the single easiest SEO win of 2025, and how to flip the switch so your content becomes the citation instead of the footnote. The AI gold rush is underway; don’t guard the gates so tightly that opportunity walks past.

What “GEO Traffic” Really Means

Generative-Engine-Optimised (GEO) traffic is the stream of visitors that arrive after your content is cited inside AI assistants—ChatGPT “Browse,” Gemini snapshots, Perplexity answers, Microsoft Copilot sidebars, even smart-speaker responses. When GPTBot or ClaudeBot crawls a page, the text and links flow into a vector store that powers these answers. Each time the model surfaces your paragraph with a live link, a percentage of users click through.

Why this matters in 2025: server-log studies show reputable AI crawlers now account for 20–30% of classic Googlebot volume on tech and SaaS sites. That slice is growing ~5% month-over-month, while traditional organic clicks inch upward only 1–2%. Miss GEO traffic today and you forfeit tomorrow’s discovery channel as models solidify their training snapshots.

Typical citation path:

1. GPTBot fetches your show-note or blog page.
2. The text is embedded and stored.
3. A user asks a question.
4. The model retrieves your snippet and cites the URL.
5. The user clicks, and you gain a high-intent visitor.

Block step 1 and the chain never starts.
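The chain above can be sketched as a toy model in Python. It is a deliberate simplification, not how OpenAI or any other vendor actually builds its index: word-count cosine similarity stands in for real embeddings, and the page URLs and texts (including thirdparty.example) are hypothetical. What it does show is the point of step 1: a page that answers 403 never reaches the index, so it can never be the citation.

```python
from collections import Counter
from math import sqrt
from typing import Dict, Optional, Tuple


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def crawl(pages: Dict[str, Tuple[int, str]]) -> Dict[str, str]:
    """Step 1: only pages that answered 200 make it past the crawler."""
    return {url: text for url, (status, text) in pages.items() if status == 200}


def build_index(fetched: Dict[str, str]) -> Dict[str, Counter]:
    """Step 2: 'embed' and store every fetched page."""
    return {url: vectorize(text) for url, text in fetched.items()}


def cite(index: Dict[str, Counter], question: str) -> Optional[str]:
    """Steps 3-4: return the URL of the best-matching page, or None."""
    query = vectorize(question)
    best_score, best_url = max(
        ((cosine(query, vec), url) for url, vec in index.items()),
        default=(0.0, None),
    )
    return best_url if best_score > 0 else None


if __name__ == "__main__":
    pages = {
        "https://yourdomain.com/pricing": (403, "widget api pricing and plans"),
        "https://thirdparty.example/review": (200, "an old review of the widget api pricing page"),
    }
    print(cite(build_index(crawl(pages)), "widget api pricing"))  # third-party URL
    pages["https://yourdomain.com/pricing"] = (200, "widget api pricing and plans")
    print(cite(build_index(crawl(pages)), "widget api pricing"))  # your URL
```

Run as a script, the first lookup returns the third-party review because your page was blocked at step 1; once the status flips to 200, your own page wins the same query.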

How Cloudflare Accidentally Chokes AI Discovery

Cloudflare’s Bot Fight Mode ships with an innocuous-sounding toggle: “Block AI Scrapers.” Once it is enabled, any request whose user-agent matches GPTBot, ClaudeBot, or PerplexityBot is challenged or rejected outright with a 403. Because the block happens at the edge, the request never reaches your origin, so your server logs never record it; only Cloudflare analytics show the spike of 4xx responses to AI user-agents.

Why the toggle exists: Cloudflare is piloting a pay-per-crawl marketplace in which large LLM vendors purchase access tokens, and Cloudflare takes a 30-40 % cut—much like Apple’s App Store tax. In the meantime, the default setting shields content by denying non-paying AI bots. Great for their margins; catastrophic for your visibility.

Symptoms you’ll see

| Symptom | Where to Spot It | What It Means |
|---|---|---|
| Spike of 403s for GPTBot in Cloudflare logs | Security ▸ Events | AI bots blocked at the edge |
| ChatGPT Browse cites third-party summaries instead of your domain | Manual prompt test | Model couldn’t crawl your content |
| Perplexity “Sources” list omits you despite topical relevance | Perplexity answer panel | Index missed your page |
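To put a number on the first symptom, you can tally 403s per crawler once you have the events in hand. This is a minimal sketch, assuming you have already reduced your Cloudflare event export to (user_agent, status) pairs; that record shape and the function name are illustrative, not a Cloudflare format. Google-Extended is deliberately absent from the token list because it never appears in a user-agent string.

```python
from collections import Counter
from typing import Iterable, Tuple

# Crawler tokens from this article's table. Google-Extended is omitted:
# it is a robots.txt token and never appears in a user-agent string.
AI_BOT_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot", "bingbot")


def blocked_ai_requests(records: Iterable[Tuple[str, int]]) -> Counter:
    """Count 403 responses per AI crawler.

    `records` is a hypothetical iterable of (user_agent, http_status) pairs,
    e.g. rows you have already extracted from an event export.
    """
    counts: Counter = Counter()
    for user_agent, status in records:
        if status != 403:
            continue
        ua = user_agent.lower()  # match tokens case-insensitively
        for token in AI_BOT_TOKENS:
            if token.lower() in ua:
                counts[token] += 1
                break  # attribute each request to a single crawler
    return counts
```

A non-zero count for GPTBot here is the same signal as the first table row: the block is active at the edge.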

Technical proof

```
$ curl -I https://yourdomain.com/ \
    --user-agent "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko); compatible; GPTBot/1.0"
HTTP/2 403
```

Run the same curl with a normal browser UA; you’ll get 200 OK. The difference is Cloudflare’s AI-bot block.
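If you’d rather script the comparison than run curl twice, here is a minimal standard-library sketch. It sends a HEAD request with each user-agent and reports the status code; the function names and the browser UA string are illustrative assumptions. One caveat: Cloudflare scores more than the user-agent header, so a script can be challenged even with a browser string. Treat a 403 on both requests as inconclusive and confirm in Security ▸ Events.

```python
import urllib.error
import urllib.request
from typing import Dict

# The GPTBot string is copied from the curl test; the browser string is illustrative.
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko)"
GPTBOT_UA = "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko); compatible; GPTBot/1.0"


def head_status(url: str, user_agent: str, timeout: float = 10.0) -> int:
    """Send a HEAD request with the given User-Agent and return the final HTTP status."""
    req = urllib.request.Request(url, method="HEAD", headers={"User-Agent": user_agent})
    try:
        # urlopen follows redirects, so this is the status after any 3xx hops.
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses raise HTTPError; its code is the status we want.
        return err.code


def compare(url: str) -> Dict[str, int]:
    """Status codes for the same URL as seen by a browser UA and by GPTBot."""
    return {
        "browser": head_status(url, BROWSER_UA),
        "gptbot": head_status(url, GPTBOT_UA),
    }
```

Call compare("https://yourdomain.com/") and expect the gptbot entry to read 403 while the block is on and 200 once it’s off.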

Bottom line: leave the toggle on and you’re effectively setting Disallow: / for every AI crawler the web relies on. Flip it off, or create an explicit Allow rule for reputable user-agents, and GEO traffic can start flowing within 24–48 hours—before competitors realise why your site keeps showing up in chat answers while theirs fades to citation dust.

AI Crawlers You Do Want Inside the Gate

| Bot | Vendor | Why You Want It | Official User-Agent String* |
|---|---|---|---|
| GPTBot | OpenAI | Feeds ChatGPT answers and link citations. | Mozilla/5.0 … GPTBot/1.0 |
| ClaudeBot | Anthropic | Powers Claude AI citations and real-time fetches. | Mozilla/5.0 … ClaudeBot/1.0 |
| PerplexityBot | Perplexity.ai | Builds Perplexity’s answer index (sources panel drives clicks). | Mozilla/5.0 … PerplexityBot/1.0 |
| Google-Extended | Google | Governs whether Gemini may use your content; separate from classic Googlebot. | None: a robots.txt token only; fetches use standard Googlebot user-agents |
| BingBot (Copilot) | Microsoft | Crawls for both Bing search and Copilot AI responses. | Mozilla/5.0 … bingbot/2.0 |

*Ellipses (…) indicate standard browser strings preceding the bot token.

Step-by-Step — Disable Cloudflare’s AI-Bot Blocking

1. Log in to the Cloudflare Dashboard and choose the domain you want to fix.
2. Navigate to Security ▸ Bots.
3. Locate the “Block AI Scrapers” toggle. It sits under Bot Fight Mode. Turn it OFF.
4. (Optional but safer) Add an explicit Allow rule:
   - Go to Security ▸ WAF ▸ Custom Rules ▸ Create.
   - Expression (Google-Extended is left out because it is a robots.txt token, not a user-agent, so a user-agent match would never fire):

     ```
     (http.user_agent contains "GPTBot") or (http.user_agent contains "ClaudeBot") or (http.user_agent contains "PerplexityBot") or (http.user_agent contains "bingbot")
     ```

   - Action: Skip → Bot Fight Mode, Managed Challenge.
5. Purge the cache: Caching ▸ Configuration ▸ Purge Everything, so bots fetch fresh 200 responses.
6. Verify:

   ```
   curl -I https://yourdomain.com/ \
     -A "Mozilla/5.0 AppleWebKit/537.36; compatible; GPTBot/1.0"
   ```

   Expect HTTP/2 200, not 403.

Total time: ~2 minutes. Result: AI crawlers can finally read and cite your pages.
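If you keep your crawler allowlist in version control, a small helper can regenerate the Allow-rule expression above so the two never drift apart. A sketch only: build_allow_expression is a hypothetical name, and its output is plain text to paste into the expression editor, not a Cloudflare API call. Tokens containing quotes or backslashes are rejected rather than escaped, so the sketch never has to guess at quoting rules. Google-Extended is left out because it is a robots.txt token with no user-agent of its own.

```python
from typing import Iterable

# Tokens from the crawler table in this article (Google-Extended omitted: no user-agent).
TOKENS = ["GPTBot", "ClaudeBot", "PerplexityBot", "bingbot"]


def build_allow_expression(tokens: Iterable[str]) -> str:
    """Join user-agent tokens into one Cloudflare Rules expression using 'contains'."""
    clauses = []
    for token in tokens:
        if '"' in token or "\\" in token:
            # Refuse instead of guessing at the expression language's escaping rules.
            raise ValueError(f"unsupported character in token: {token!r}")
        clauses.append(f'(http.user_agent contains "{token}")')
    return " or ".join(clauses)


if __name__ == "__main__":
    print(build_allow_expression(TOKENS))
```

Tokens are emitted exactly as written, so keep each one’s capitalization as it appears in the bot’s documented user-agent.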

Robots.txt for an AI-First SEO Posture

```
User-agent: *
Allow: /
```

That’s it. A blanket allow lets every reputable bot, search and AI alike, reach every public URL. Partial or legacy Disallow: lines can quietly break modern indexation because:

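- AI bots usually have no User-agent group of their own, so they inherit the * rules, and those rules match URL prefixes: a stray Disallow: /api also blocks /api-docs/ and anything else beginning with /api, and a stray Disallow: / denies the whole site.
- Future crawlers inherit the same rules, so your “temporary” block becomes a permanent training-data exclusion.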

If you must throttle bandwidth, use Cloudflare rate-limiting or WAF, not robots.txt, so you maintain crawl visibility while controlling load.
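To see why a stray rule bites harder than it looks, you can run both robots.txt variants through Python’s standard-library urllib.robotparser. A minimal sketch: the rules are parsed from strings instead of fetched, the paths are placeholders, and this parser applies the first matching rule rather than RFC 9309’s longest match, which makes no difference for these single-rule files.

```python
from urllib.robotparser import RobotFileParser


def parser_for(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text instead of fetching it over HTTP."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


open_gate = parser_for("User-agent: *\nAllow: /")
stray_rule = parser_for("User-agent: *\nDisallow: /api")

for path in ("/blog/post", "/api/v1/users", "/api-docs/intro"):
    url = "https://yourdomain.com" + path
    # Columns: path, allowed under blanket allow, allowed under the stray rule.
    print(path, open_gate.can_fetch("GPTBot", url), stray_rule.can_fetch("GPTBot", url))
```

Every path passes under the blanket allow. Under Disallow: /api, /blog/post still passes, but both /api/v1/users and /api-docs/intro are refused, because rules match URL prefixes.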

Open gate, verify 200s, let GEO traffic flow.

FAQ — Cloudflare, AI Bots, and Blocking

Q 1. Cloudflare’s “Bot Fight Mode” is on, but I don’t see any errors in my server logs—why?

Cloudflare blocks GPTBot and friends at the edge, so those requests never reach your origin and its logs stay clean. Check Cloudflare Dashboard ▸ Security ▸ Events or run a curl test with the bot’s user-agent; that’s where the hidden blocks surface.

Q 2. Will allowing GPTBot spike my bandwidth bill?

A full GPTBot crawl is lightweight: HTML only, no images, no CSS, no JS execution. For a 500-page site it’s typically under 30 MB per month. Cloudflare doesn’t meter CDN bandwidth on any plan, Free included, so any cost lands on your origin host, where 30 MB a month is negligible.
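The estimate is plain multiplication: pages × average HTML size × full crawls per month. A sketch; the 60 KB average page weight and one crawl per month are assumptions picked to reproduce the 30 MB figure above, so plug in your own numbers.

```python
def monthly_crawl_mb(pages: int, avg_html_kb: float, crawls_per_month: float = 1.0) -> float:
    """Estimated crawler transfer per month in MB (decimal: 1 MB = 1,000 KB)."""
    return pages * avg_html_kb * crawls_per_month / 1000


print(monthly_crawl_mb(500, 60))     # → 30.0  (500 pages x 60 KB x 1 crawl)
print(monthly_crawl_mb(500, 60, 4))  # → 120.0 (same site, crawled weekly)
```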

Q 3. Could unblocking AI crawlers expose private or paid content?

Only if the URLs are publicly reachable. Keep premium PDFs or member videos behind authentication headers; GPTBot obeys HTTP 401/403 just like Googlebot. Robots.txt is not a security feature.

Q 4. Does Cloudflare’s “Verified Bot” list include AI crawlers?

No. GPTBot, ClaudeBot, and PerplexityBot are not on Cloudflare’s verified list yet, so they fall into the generic “AI Scraper” bucket that gets blocked when the toggle is on.

Q 5. What about sketchy, bandwidth-draining AI scrapers?

Create a WAF rule to allow only reputable user agents (GPTBot, ClaudeBot, PerplexityBot, bingbot) and rate-limit everything else. You stay open for citations but guard against unknown harvesters. (Google-Extended needs no rule: it is controlled through robots.txt.)
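The policy in this answer (allowlisted crawlers pass, everyone else is throttled) can be sketched as a single decision function. This illustrates the logic only: a fixed-window counter per client key stands in for Cloudflare’s rate limiting and is not its implementation, while the class name, the 60-requests-per-minute default, and keying on a client IP are hypothetical. User-agent strings are self-reported, so a real deployment should not trust the header alone.

```python
import time
from typing import Callable, Dict, Tuple

# Allowlisted tokens, matched case-sensitively against the User-Agent header.
ALLOWED_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot", "bingbot")


class CrawlerPolicy:
    """Allowlisted AI crawlers pass; other clients get a fixed-window rate limit."""

    def __init__(self, limit: int = 60, window_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window_s = window_s
        self.clock = clock
        # client key -> (window start time, requests counted in that window)
        self.windows: Dict[str, Tuple[float, int]] = {}

    def allow(self, client_key: str, user_agent: str) -> bool:
        # Note: the header is self-reported and can be spoofed.
        if any(token in user_agent for token in ALLOWED_TOKENS):
            return True
        now = self.clock()
        start, count = self.windows.get(client_key, (now, 0))
        if now - start >= self.window_s:
            start, count = now, 0  # previous window expired; start a new one
        if count >= self.limit:
            self.windows[client_key] = (start, count)
            return False  # over the limit for this window
        self.windows[client_key] = (start, count + 1)
        return True
```

Injecting the clock makes the window easy to test: with limit=2, a third request inside the same minute from an unlisted client is refused, while a GPTBot request still passes.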

Q 6. If I unblock today, how fast will AI assistants start citing me?

GPTBot revisits popular or recently updated pages within 24–72 hours. ChatGPT Browse can display new citations a day or two later. Less-trafficked pages may take a week or more.