Google AI Overviews cause massive drop in search clicks

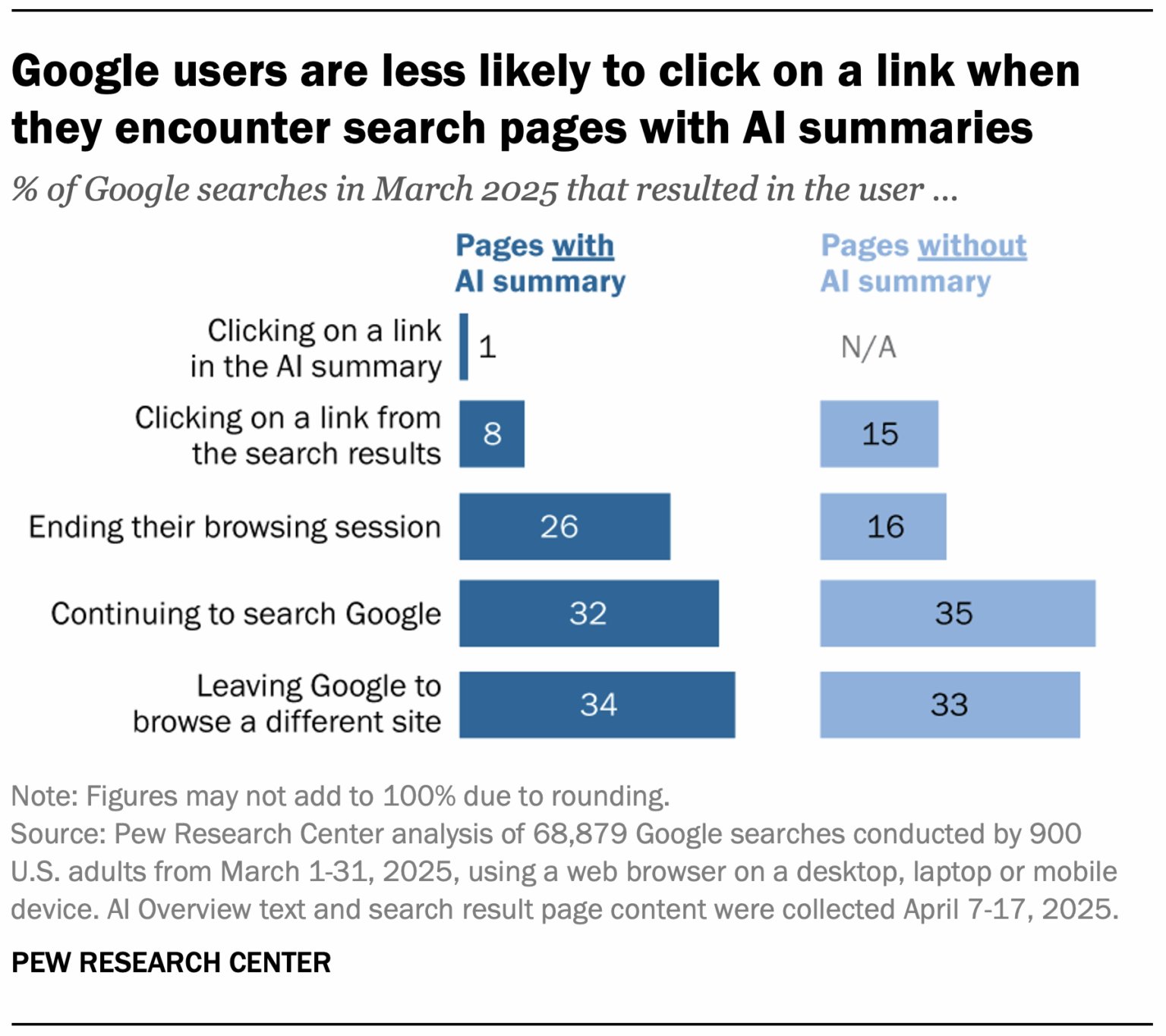

Picture a search results page where just one extra panel — the pastel‑boxed AI Overview — causes nearly half of your potential visitors to vanish. That’s the reality Pew Research Center surfaced when it tracked 900 real users in March 2025. On a conventional Google page, about 15 percent of people clicked through to a website. Add an AI Overview, and the click‑through rate cratered to 8 percent.

The math is brutal: for every thousand searches, you’ve just lost seventy clicks—eyeballs that would have landed on your articles, storefronts, or sign‑up pages. Worse, Pew found that the AI tile itself rarely drives traffic back to sources. Barely 1 percent of summaries produced a click on a citation, and when they did, the winners were the giants—Wikipedia, YouTube, Reddit—leaving niche publishers, SaaS docs, and indie blogs out in the cold.

Why does it sting so much? Because AI Overviews aren’t a fringe experiment anymore. Google now injects them in roughly one out of every five queries, and the prevalence climbs to 60 percent when a search is worded as a question. That means any brand relying on informational keywords is fighting an algorithm that increasingly answers before it refers.

If your content strategy still assumes the blue‑link era, these numbers are your wake‑up call. The next sections break down how Pew ran the study, where Google’s rebuttal falls apart, and—most importantly—what publishers can do to reclaim the clicks drifting into the AI void.

What Are Google AI Overviews?

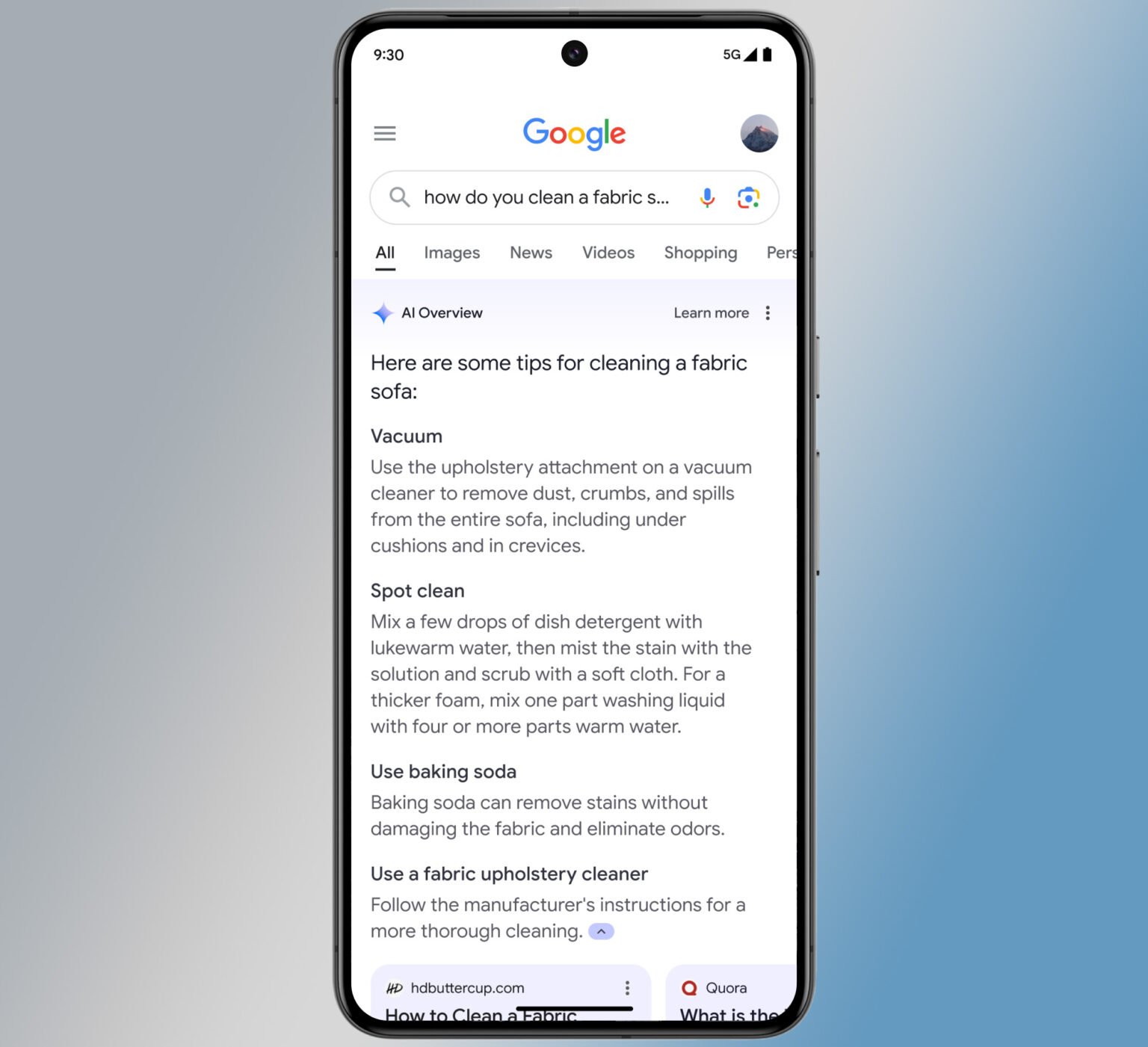

Google’s AI Overviews sit atop the results page like a super‑charged featured snippet, pulling text from multiple sites and generating a conversational answer. The feature began as Search Generative Experience (SGE) in May 2023, visible only to volunteers in Google’s Labs. Over the next twelve months, the company expanded SGE, testing follow‑up question chips, inline shopping links, and citation cards.

In May 2024, Google dropped the “Labs” label and promoted AI Overviews into the default U.S. search interface, initially on a narrow set of product and how-to queries. Each month afterward, the coverage widened: recipes in August 2024, health questions in November, travel planning in early 2025. By July 2025, third-party tracking firms estimated AI Overviews appeared on roughly 20 percent of all desktop searches, with the likelihood soaring to 60 percent for question-style and multi-sentence queries. In effect, the AI tile has become a second front page, one that often answers before users ever reach the blue links.

Inside the Pew Research Methodology

To quantify the impact, the Pew Research Center partnered with Ipsos’s KnowledgePanel, equipping 900 U.S. adults with a browser plug‑in that logged every Google search they ran in March 2025. The plug‑in recorded:

- Query text and length (single word, phrase, or full question).
- Presence or absence of an AI Overview on the resulting SERP.
- User interactions: scroll depth, link clicks, and whether the session ended on the SERP.

Pew then compared click‑through behaviour on pages with versus without the Gemini‑generated panel. Their headline finding: pages that showed an AI Overview cut outbound clicks nearly in half—from 15 percent down to 8 percent. Even more striking, only 1 percent of all AI Overviews produced a click on any cited source.
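To make the comparison concrete, here is a minimal Python sketch of how logged searches could be grouped by whether an AI Overview appeared. The `SearchEvent` record, its field names, and the sample rows are all hypothetical: they mirror the three kinds of data listed above, but they are not Pew's schema and not Pew's data.

```python
from dataclasses import dataclass


@dataclass
class SearchEvent:
    """One logged search. Field names are illustrative assumptions."""
    query: str
    has_ai_overview: bool   # was an AI Overview shown on the SERP?
    clicked_result: bool    # did the user click any organic result?
    ended_on_serp: bool     # did the session end without leaving the SERP?


def click_through_rate(events, with_overview):
    """Share of searches with a result click, for one kind of SERP."""
    subset = [e for e in events if e.has_ai_overview == with_overview]
    if not subset:
        return 0.0
    return sum(e.clicked_result for e in subset) / len(subset)


# Invented toy rows, for illustration only (NOT figures from the study).
toy_events = [
    SearchEvent("weather", False, True, False),
    SearchEvent("hiking boots", False, False, True),
    SearchEvent("how do i descale a kettle", True, False, True),
    SearchEvent("what is a serp", True, True, False),
    SearchEvent("why is the sky blue", True, False, True),
    SearchEvent("how long to boil an egg", True, False, True),
]

print(f"CTR without AI Overview: {click_through_rate(toy_events, False):.0%}")
print(f"CTR with AI Overview:    {click_through_rate(toy_events, True):.0%}")
```

Grouping on a single boolean keeps the comparison simple. A real analysis would also need weighting and per-user clustering, which this sketch leaves out.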

Google’s PR team dismissed the study, arguing the sample size and “skewed queryset” misrepresent overall traffic. Pew counters that its opt‑in panel methodology is identical to the one researchers have trusted for election polling and media‑consumption studies for two decades. While 900 participants cannot mirror Google’s billions of daily searches, the data are directionally consistent with site‑level analytics many publishers have reported since Overviews rolled out.

In short, the Pew project captures real‑world user behaviour rather than Google’s internal click accounting—and it paints a troubling picture for any publisher who depends on organic traffic.

Key Findings at a Glance

Pew’s dataset turns a year of hand-waving speculation into hard numbers:

- When an AI Overview appears, the likelihood of any organic click on that SERP falls from 15 percent to 8 percent, almost a 50 percent haircut to the open-web economy.
- The promised “traffic dividend” from citations barely exists. Out of thousands of AI tiles logged, just 1 percent produced even a single click on a cited source link. Put differently, ninety-nine times out of a hundred the AI answer satisfies (or stalls) the user before a publisher can claim so much as a page-view.
- Question-style searches, the bread-and-butter of informational content, are exactly where the robot intervenes: 60 percent of queries phrased as questions now trigger an AI summary.
- The spoils are concentrated. Three destinations (Wikipedia, YouTube, and Reddit) account for 15 percent of all citations the model makes, leaving the remaining 85 percent to be divided among every other site on the internet.
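As a quick check on the arithmetic behind the headline figure, the short Python sketch below converts the two reported click-through rates into clicks per thousand searches and a relative drop. The function and constant names are illustrative; only the 15 percent and 8 percent inputs come from the study as reported above.

```python
def clicks_per_thousand(ctr_percent, searches=1000):
    """Expected clicks for a click-through rate given in percent."""
    return searches * ctr_percent / 100


# Click-through rates as reported in the article (percent).
CTR_WITHOUT_OVERVIEW = 15.0
CTR_WITH_OVERVIEW = 8.0

baseline = clicks_per_thousand(CTR_WITHOUT_OVERVIEW)
with_overview = clicks_per_thousand(CTR_WITH_OVERVIEW)

lost = baseline - with_overview    # absolute loss per 1,000 searches
relative_drop = lost / baseline    # loss as a share of the baseline

print(f"Clicks lost per 1,000 searches: {lost:.0f}")
print(f"Relative drop: {relative_drop:.1%}")
```

The absolute gap is seven percentage points, or seventy clicks per thousand searches. The relative drop works out to a little under 47 percent, which is where the "almost 50 percent" shorthand comes from.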

Winners & Losers: Who Gets Cited?

Pew’s citation log reads like a popularity contest skewed toward scale and community muscle. Authority encyclopedias (Wikipedia) and user-generated behemoths (YouTube videos, Reddit threads) dominate, plausibly because they offer expansive, continuously updated content pools that a model can draw on for almost any topic. Mega-publishers with a Domain Rating (DR) of 90 or higher, such as Mayo Clinic, The New York Times, and Investopedia, show up often enough to keep their brand equity intact.

The casualties sit at the long tail: niche newsrooms, specialist SaaS blogs, academic journals behind paywalls, and local outlets. These sites rarely appear in the AI panel, and when they do, users almost never click through. Even when a smaller publisher provides the original scoop, Gemini often paraphrases it and attributes it to a higher-authority roundup or a crowd-sourced forum.

For marketers, the takeaway is brutal but clear. If you’re not already in the citational “inner circle,” relying on traditional blue‑link SEO alone won’t protect your traffic. The strategy frontier shifts to structured data, concise answer blocks, and explicit invitations for AI crawlers—all aimed at convincing the model you deserve one of the few outbound links it still doles out.

SEO Best Practices in an AI‑Dominated SERP

Google’s new interface rewards sites that feed its models clean, verifiable data—and quietly sidelines the rest. Three priorities now separate the winners from the traffic orphans.

1. Welcome the Bots That Write the Answers

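Blocking GPTBot, ClaudeBot, PerplexityBot, or Google-Extended is the modern equivalent of adding noindex to your homepage, at least as far as AI answers are concerned. Leave reputable AI crawlers unblocked in robots.txt (a User-agent: * group containing Allow: /) and disable any “Block AI Scrapers” toggle in Cloudflare or your firewall. One caveat: Google documents Google-Extended as a control token for Gemini training rather than a separate crawler, and says it does not affect inclusion in Search, so AI Overviews depend on ordinary Googlebot access. The crawl cost is pennies, and every successful fetch gives a model the chance to surface your paragraph, complete with a link, in future answers.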
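One way to sanity-check a robots.txt file before deploying it is Python's standard-library `urllib.robotparser`. The sketch below parses a robots.txt string and reports which of the crawler tokens named above may fetch the site root. The helper name `allowed_crawlers` is hypothetical, and the user-agent tokens should be confirmed against each vendor's current documentation. Python's parser follows the classic robots exclusion rules, so individual crawlers may interpret edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# The permissive policy described in the text.
ROBOTS_TXT = """\
User-agent: *
Allow: /
"""

# Tokens as named in this article; verify against vendor docs.
AI_CRAWLERS = ["GPTBot", "ClaudeBot", "PerplexityBot", "Google-Extended"]


def allowed_crawlers(robots_txt, agents, url="/"):
    """Map each user-agent token to whether it may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


for agent, ok in allowed_crawlers(ROBOTS_TXT, AI_CRAWLERS).items():
    print(f"{agent}: {'allowed' if ok else 'blocked'}")
```

Running the same helper against a draft that contains a `User-agent: GPTBot` group with `Disallow: /` would flag that crawler as blocked, which makes accidental blocks easier to catch in review.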

2. Serve a Fact‑Checked Answer Box on Every Page

AI Overviews pluck concise statements, numbered steps, and bullet-point summaries. Give them exactly that: a 40- to 60-word answer block near the top of informational articles, placed under an <h2> such as “Quick Answer.” Make it verifiable (cite the study, include the year, link to the primary source) and keep promotional fluff out. Google’s ranking systems and its LLM both gravitate toward text that is direct, data-anchored, and attribution-friendly.
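The 40- to 60-word target is easy to enforce in an editorial pipeline. The following Python sketch strips tags from an answer block and checks its word count. The function names and the sample paragraph are illustrative assumptions, and the regex-based tag stripping is a simplification that would not cope with every kind of HTML.

```python
import re

MIN_WORDS, MAX_WORDS = 40, 60  # range suggested in the text


def answer_block_word_count(html):
    """Count whitespace-separated words after removing HTML tags."""
    text = re.sub(r"<[^>]+>", " ", html)
    return len(text.split())


def is_valid_answer_block(html):
    """True when the block falls inside the suggested word range."""
    return MIN_WORDS <= answer_block_word_count(html) <= MAX_WORDS


# Hypothetical "Quick Answer" paragraph built from figures in this article.
sample = (
    "<p>Pew Research Center tracked 900 U.S. adults in March 2025. "
    "On Google results pages without an AI Overview, the click-through "
    "rate was about 15 percent; with an AI Overview it fell to 8 percent. "
    "Only about 1 percent of AI Overviews produced a click on a cited "
    "source.</p>"
)

print(answer_block_word_count(sample), is_valid_answer_block(sample))
```

A check like this could run in a CMS pre-publish hook, flagging blocks that have drifted too long or too short.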

3. Double‑Down on Brand Queries and E‑E‑A‑T

If generic clicks are drying up, protect—and expand—the traffic you own outright: searches that include your brand name. Optimise title tags and meta descriptions to pair the brand with core topics (“AcmeLabs Vitamin‑C Serum Review & Science”). Bolster E‑E‑A‑T by adding author bios with credentials, linking to peer‑reviewed citations, displaying ISO or GMP certifications, and maintaining a transparent “Last Reviewed” timestamp. The stronger your perceived authority, the more likely Google’s AI will quote you instead of a forum thread.
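Author credentials and a “Last Reviewed” date can also be exposed as structured data. The sketch below builds a schema.org `WebPage` object as JSON-LD, using the `author` and `lastReviewed` properties. The helper name, the author, and the date are placeholders, and whether Google's AI features actually weigh this markup is an assumption rather than something the study measured.

```python
import json
from datetime import date


def build_page_jsonld(headline, author_name, author_title, last_reviewed):
    """Return a JSON-LD string describing the page, author, and review date."""
    data = {
        "@context": "https://schema.org",
        "@type": "WebPage",
        "headline": headline,
        "author": {
            "@type": "Person",
            "name": author_name,        # placeholder author
            "jobTitle": author_title,   # credential shown in the bio
        },
        "lastReviewed": last_reviewed.isoformat(),
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
markup = build_page_jsonld(
    headline="AcmeLabs Vitamin-C Serum Review & Science",
    author_name="Jane Doe",
    author_title="Cosmetic Chemist",
    last_reviewed=date(2025, 7, 1),
)
print(markup)
```

The resulting string would be embedded in a `<script type="application/ld+json">` element in the page head.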

Conclusion — Adapt or Watch Traffic Evaporate

Pew’s numbers are the canary in the SERP: clicks are evaporating wherever AI answers appear, and the coverage area is only widening. Sites that cooperate with reputable crawlers, package their expertise in citation‑ready formats, and fortify brand authority will still earn visibility—both in traditional blue links and inside the AI tiles themselves. Everyone else risks becoming background training data, remembered by the model but never visited by the user. Adapt your SEO playbook to this AI‑first reality now, or prepare to measure your future audience in impressions instead of clicks.